GaussianCity: Generative Gaussian Splatting for Unbounded 3D City Generation

Haozhe Xie, Zhaoxi Chen, Fangzhou Hong, Ziwei Liu

S-Lab, Nanyang Technological University

TL;DR: GaussianCity is a framework for efficient unbounded 3D city generation using 3D Gaussian Splatting.

Abstract

3D city generation with NeRF-based methods shows promising generation results but is computationally inefficient. Recently, 3D Gaussian Splatting (3D-GS) has emerged as a highly efficient alternative for object-level 3D generation. However, adapting 3D-GS from finite-scale 3D objects and humans to infinite-scale 3D cities is non-trivial. Unbounded 3D city generation entails significant storage overhead (out-of-memory issues), arising from the need to expand points to billions, often demanding hundreds of gigabytes of VRAM for a city scene spanning 10km². In this paper, we propose GaussianCity, a generative Gaussian Splatting framework dedicated to efficiently synthesizing unbounded 3D cities with a single feed-forward pass. Our key insights are two-fold: 1) Compact 3D Scene Representation: We introduce BEV-Point as a highly compact intermediate representation, ensuring that the growth in VRAM usage for unbounded scenes remains constant, thus enabling unbounded city generation. 2) Spatial-aware Gaussian Attribute Decoder: We present a spatial-aware BEV-Point decoder to produce 3D Gaussian attributes, which leverages a Point Serializer to integrate the structural and contextual characteristics of BEV points. Extensive experiments demonstrate that GaussianCity achieves state-of-the-art results in both drone-view and street-view 3D city generation. Notably, compared to CityDreamer, GaussianCity exhibits superior performance with a speedup of 60 times (10.72 FPS vs. 0.18 FPS).

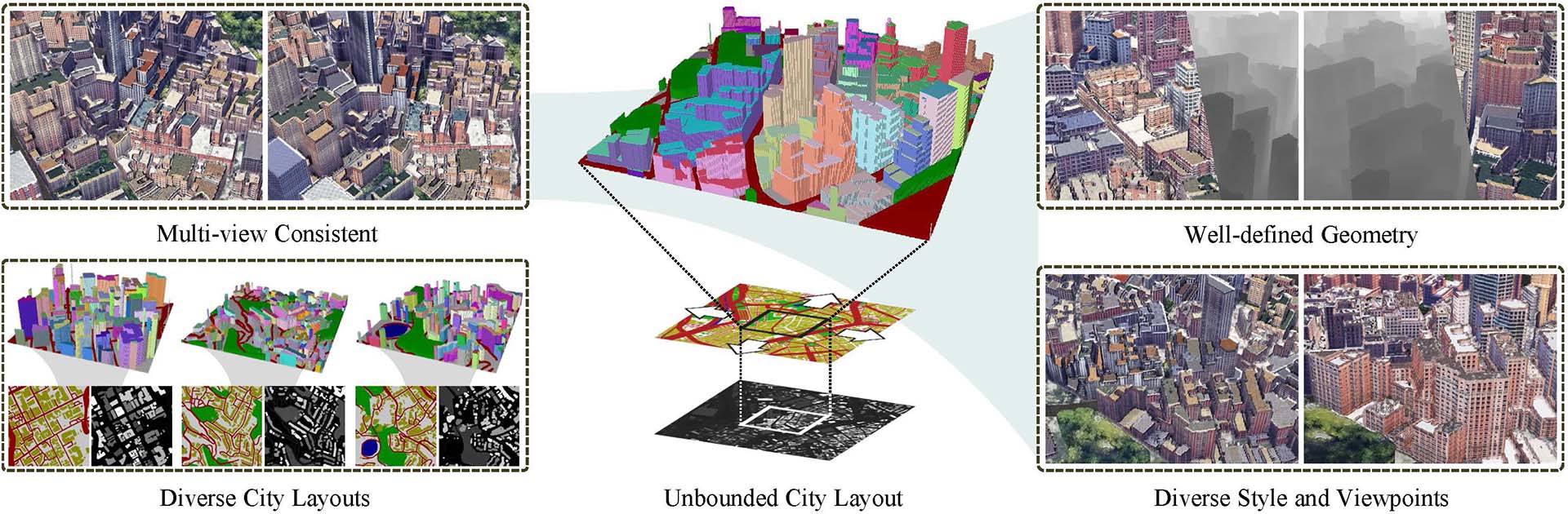

CityDreamer: Compositional Generative Model of Unbounded 3D Cities

Haozhe Xie, Zhaoxi Chen, Fangzhou Hong, Ziwei Liu

S-Lab, Nanyang Technological University

TL;DR: CityDreamer learns to generate unbounded 3D cities from Google Earth imagery and OpenStreetMap.

Abstract

In recent years, extensive research has focused on 3D natural scene generation, but the domain of 3D city generation has not received as much exploration. This is due to the greater challenges posed by 3D city generation, mainly because humans are more sensitive to structural distortions in urban environments. Additionally, generating 3D cities is more complex than 3D natural scenes since buildings, as objects of the same class, exhibit a wider range of appearances compared to the relatively consistent appearance of objects like trees in natural scenes. To address these challenges, we propose CityDreamer, a compositional generative model designed specifically for unbounded 3D cities, which separates the generation of building instances from other background objects, such as roads, green lands, and water areas, into distinct modules. Furthermore, we construct two datasets, OSM and GoogleEarth, containing a vast amount of real-world city imagery to enhance the realism of the generated 3D cities both in their layout and appearance. Through extensive experiments, CityDreamer has proven its superiority over state-of-the-art methods in generating a wide range of lifelike 3D cities.

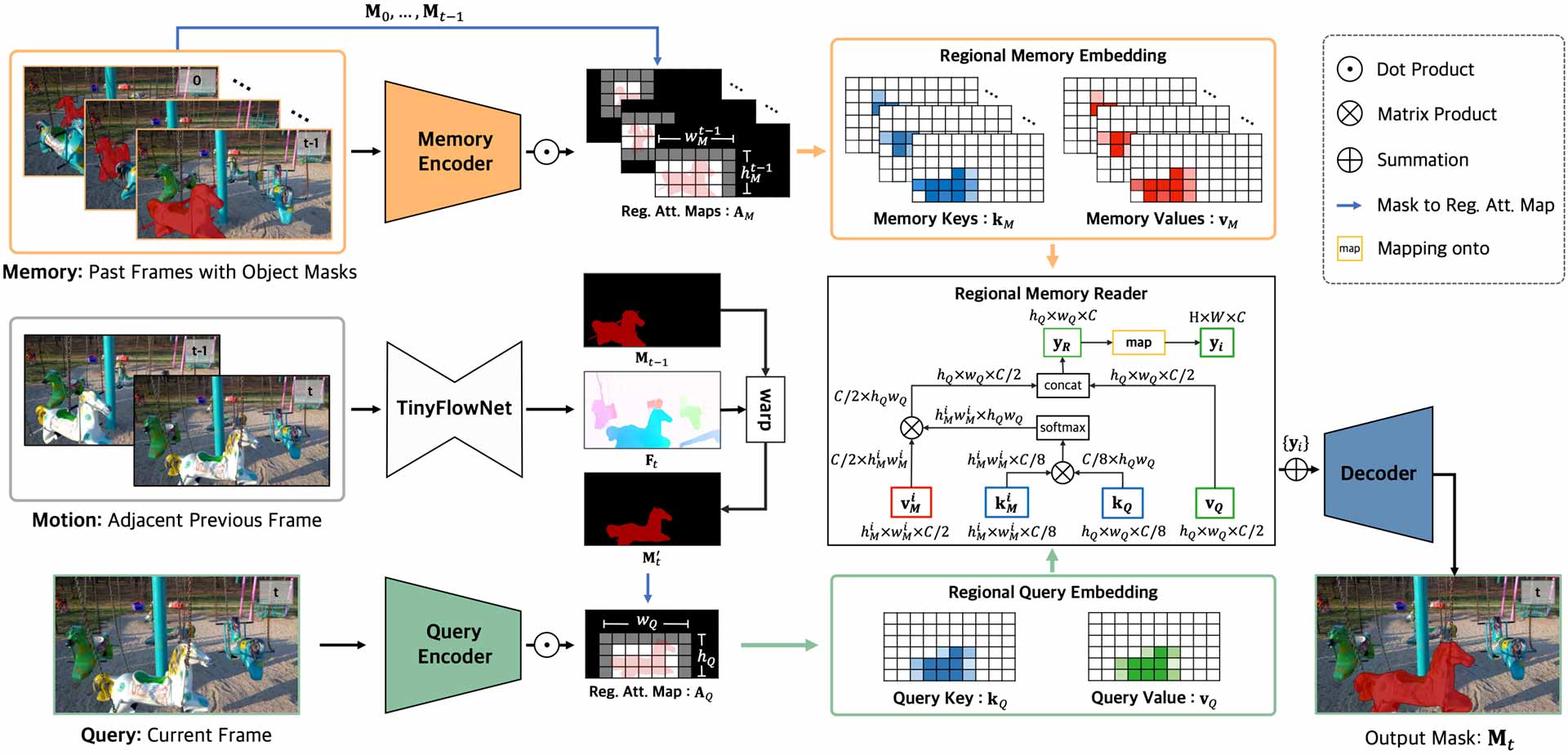

Efficient Regional Memory Network for Video Object Segmentation

Haozhe Xie, Hongxun Yao, Shangchen Zhou, Shengping Zhang, Wenxiu Sun

Abstract

Recently, several Space-Time Memory based networks have shown that the object cues (e.g., video frames as well as the segmented object masks) from the past frames are useful for segmenting objects in the current frame. However, these methods exploit the information from the memory by global-to-global matching between the current and past frames, which leads to mismatching to similar objects and high computational complexity. To address these problems, we propose a novel local-to-local matching solution for semi-supervised VOS, namely Regional Memory Network (RMNet). In RMNet, the precise regional memory is constructed by memorizing local regions where the target objects appear in the past frames. For the current query frame, the query regions are tracked and predicted based on the optical flow estimated from the previous frame. The proposed local-to-local matching effectively alleviates the ambiguity of similar objects in both memory and query frames, which allows the information to be passed from the regional memory to the query region efficiently and effectively. Experimental results indicate that the proposed RMNet performs favorably against state-of-the-art methods on the DAVIS and YouTube-VOS datasets.

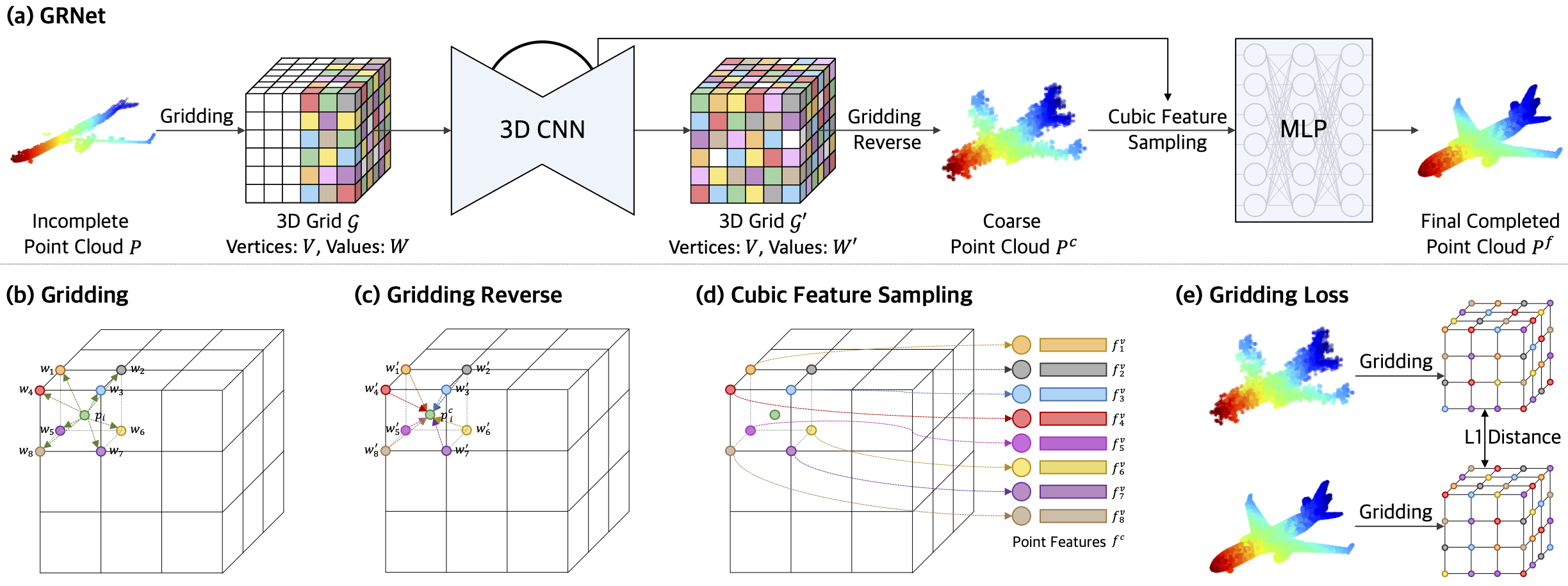

GRNet: Gridding Residual Network for Dense Point Cloud Completion

Haozhe Xie, Hongxun Yao, Shangchen Zhou, Jiageng Mao, Shengping Zhang, Wenxiu Sun

Abstract

Estimating the complete 3D point cloud from an incomplete one is a key problem in many vision and robotics applications. Mainstream methods (e.g., PCN and TopNet) use Multi-layer Perceptrons (MLPs) to directly process point clouds, which may cause the loss of details because the structure and context of point clouds are not fully considered. To solve this problem, we introduce 3D grids as intermediate representations to regularize unordered point clouds. We therefore propose a novel Gridding Residual Network (GRNet) for point cloud completion. In particular, we devise two novel differentiable layers, named Gridding and Gridding Reverse, to convert between point clouds and 3D grids without losing structural information. We also present the differentiable Cubic Feature Sampling layer to extract features of neighboring points, which preserves context information. In addition, we design a new loss function, namely Gridding Loss, to calculate the L1 distance between the 3D grids of the predicted and ground truth point clouds, which is helpful to recover details. Experimental results indicate that the proposed GRNet performs favorably against state-of-the-art methods on the ShapeNet, Completion3D, and KITTI benchmarks.
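The idea behind Gridding Loss, an L1 distance between the 3D grids of two point clouds, can be illustrated with a small pure-Python sketch. This is not the GRNet implementation: the function names `gridding` and `gridding_loss`, the sparse-dictionary grid, the use of trilinear weights summed per vertex, and the normalization by the number of grid vertices are all assumptions made for illustration.

```python
import math
from itertools import product


def gridding(points, resolution):
    """Scatter points onto a resolution^3 grid (hypothetical simplification).

    Each point, with coordinates in [0, resolution - 1], adds a trilinear
    interpolation weight to the 8 vertices of the cell that contains it.
    The grid is stored sparsely as {(x, y, z): accumulated weight}.
    """
    grid = {}
    for x, y, z in points:
        x0, y0, z0 = math.floor(x), math.floor(y), math.floor(z)
        for dx, dy, dz in product((0, 1), repeat=3):
            vx, vy, vz = x0 + dx, y0 + dy, z0 + dz
            if not all(0 <= v < resolution for v in (vx, vy, vz)):
                continue  # vertex lies outside the grid
            w = (1 - abs(vx - x)) * (1 - abs(vy - y)) * (1 - abs(vz - z))
            if w > 0:
                grid[(vx, vy, vz)] = grid.get((vx, vy, vz), 0.0) + w
    return grid


def gridding_loss(pred_points, gt_points, resolution):
    """Mean L1 distance between the grids of two point clouds (assumed form)."""
    pred = gridding(pred_points, resolution)
    gt = gridding(gt_points, resolution)
    vertices = set(pred) | set(gt)  # vertices absent from both contribute 0
    total = sum(abs(pred.get(v, 0.0) - gt.get(v, 0.0)) for v in vertices)
    return total / resolution ** 3


if __name__ == "__main__":
    predicted = [(1.0, 1.0, 1.0), (2.5, 2.5, 2.5)]
    ground_truth = [(1.2, 1.0, 1.0), (2.5, 2.5, 2.5)]
    print(gridding_loss(predicted, ground_truth, resolution=4))
```

Because both clouds are mapped onto the same regular grid, the loss needs no point-to-point correspondence, and it is zero when the two clouds produce identical grids.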

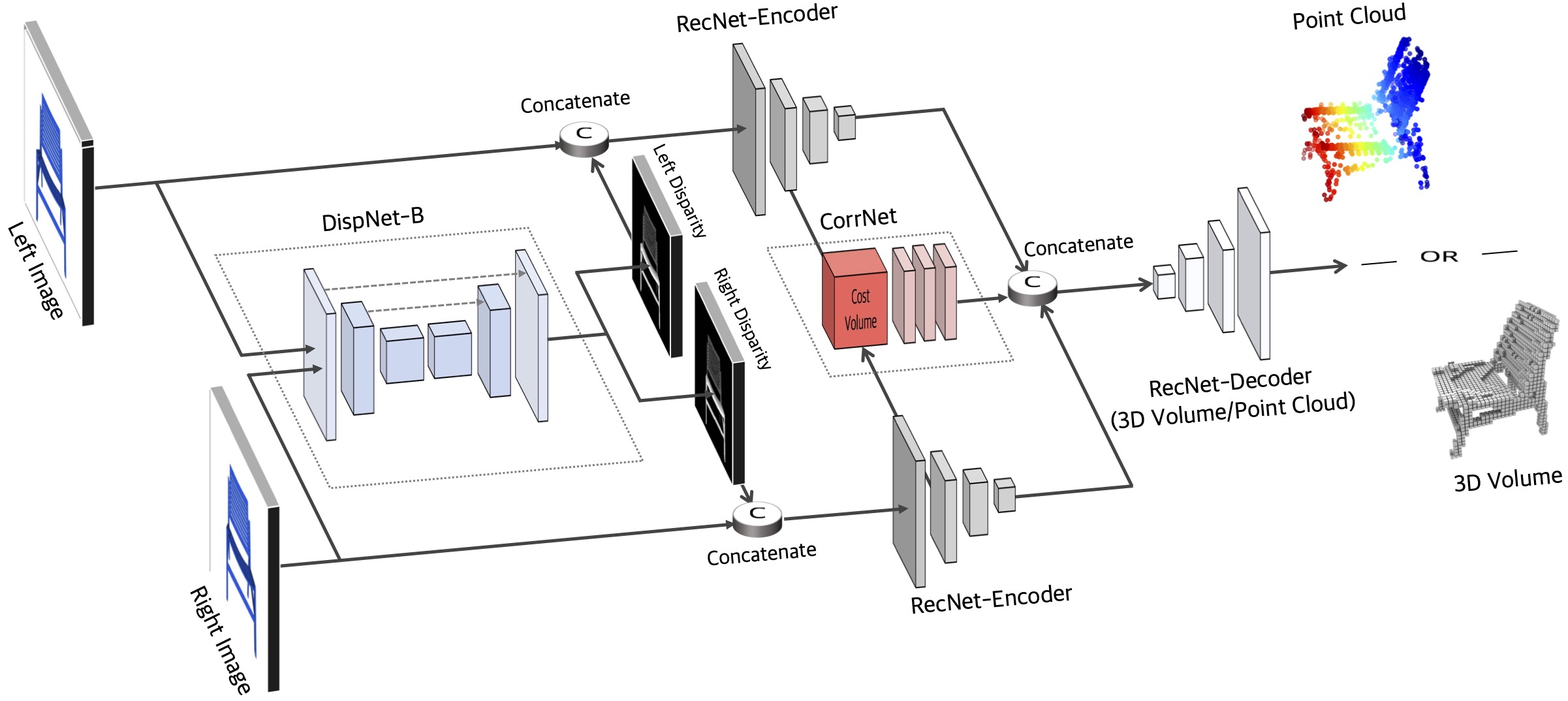

Toward 3D Object Reconstruction from Stereo Images

Haozhe Xie, Hongxun Yao, Shangchen Zhou, Shengping Zhang, Xiaojun Tong, Wenxiu Sun

Abstract

Inferring the complete 3D shape of an object from an RGB image has shown impressive results; however, existing methods rely primarily on recognizing the most similar 3D model from the training set to solve the problem. These methods suffer from poor generalization and may lead to low-quality reconstructions for unseen objects. Nowadays, stereo cameras are pervasive in emerging devices such as dual-lens smartphones and robots, which enables the use of the two-view nature of stereo images to explore the 3D structure and thus improve the reconstruction performance. In this paper, we propose a new deep learning framework for reconstructing the 3D shape of an object from a pair of stereo images, which reasons about the 3D structure of the object by taking bidirectional disparities and feature correspondences between the two views into account. Besides, we present a large-scale synthetic benchmarking dataset, namely StereoShapeNet, containing 1,052,976 pairs of stereo images rendered from ShapeNet along with the corresponding bidirectional depth and disparity maps. Experimental results on the StereoShapeNet benchmark demonstrate that the proposed framework outperforms the state-of-the-art methods.

Pix2Vox: Context-aware 3D Reconstruction from Single and Multi-view Images

Haozhe Xie, Hongxun Yao, Xiaoshuai Sun, Shangchen Zhou, Shengping Zhang, Wenxiu Sun

Abstract

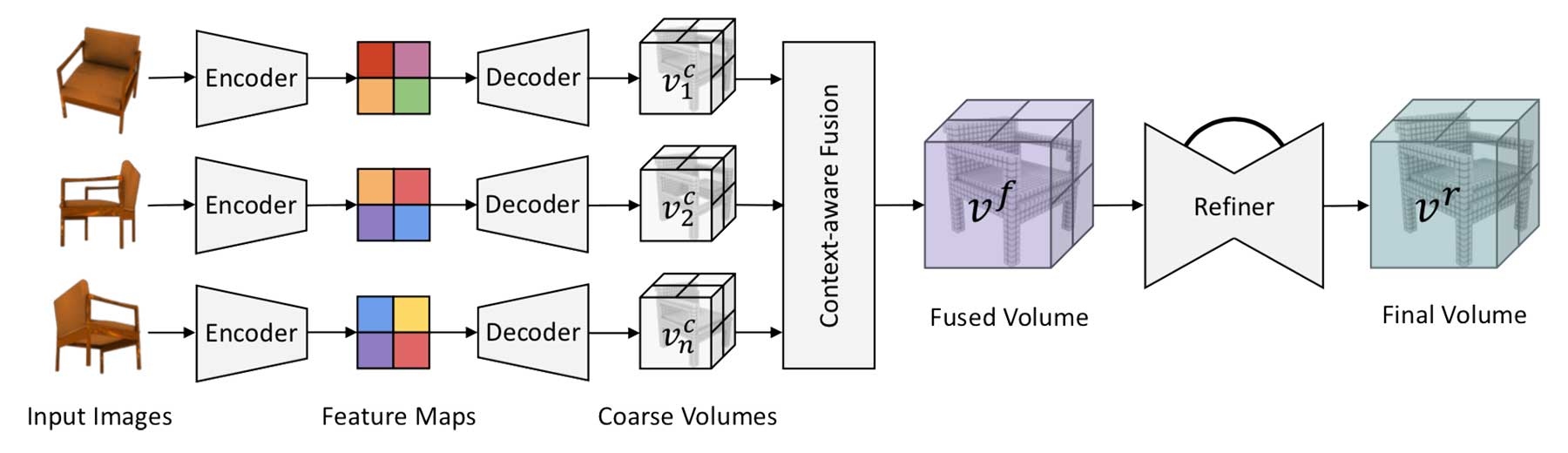

Recovering the 3D representation of an object from single-view or multi-view RGB images by deep neural networks has attracted increasing attention in the past few years. Several mainstream works (e.g., 3D-R2N2) use recurrent neural networks (RNNs) to fuse multiple feature maps extracted from input images sequentially. However, when given the same set of input images with different orders, RNN-based approaches are unable to produce consistent reconstruction results. Moreover, due to long-term memory loss, RNNs cannot fully exploit input images to refine reconstruction results. To solve these problems, we propose a novel framework for single-view and multi-view 3D reconstruction, named Pix2Vox. By using a well-designed encoder-decoder, it generates a coarse 3D volume from each input image. Then, a context-aware fusion module is introduced to adaptively select high-quality reconstructions for each part (e.g., table legs) from different coarse 3D volumes to obtain a fused 3D volume. Finally, a refiner further refines the fused 3D volume to generate the final output. Experimental results on the ShapeNet and Pix3D benchmarks indicate that the proposed Pix2Vox outperforms state-of-the-art methods by a large margin. Furthermore, the proposed method is 24 times faster than 3D-R2N2 in terms of backward inference time. The experiments on ShapeNet unseen 3D categories have shown the superior generalization abilities of our method.
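The fusion step described above can be sketched as a per-voxel weighted average over views. The sketch below is only an illustration under stated assumptions: it takes per-voxel scores as given (in Pix2Vox they would come from a learned scoring module, which is not modeled here), normalizes them with a softmax across views, and uses flat Python lists for volumes. The name `fuse_volumes` is hypothetical.

```python
import math


def fuse_volumes(volumes, scores):
    """Fuse coarse volumes with per-voxel softmax weights across views.

    volumes: one flat list of voxel occupancies per view.
    scores:  one flat list of per-voxel scores per view (assumed given).
    Returns a single flat list with the fused occupancies.
    """
    n_voxels = len(volumes[0])
    fused = []
    for i in range(n_voxels):
        view_scores = [s[i] for s in scores]
        top = max(view_scores)  # subtract the max for numerical stability
        exps = [math.exp(v - top) for v in view_scores]
        norm = sum(exps)
        fused.append(sum(e / norm * vol[i] for e, vol in zip(exps, volumes)))
    return fused


if __name__ == "__main__":
    coarse = [[0.0, 1.0], [1.0, 1.0]]      # two views, two voxels each
    scores = [[100.0, 0.0], [0.0, 0.0]]    # view 0 is trusted for voxel 0
    print(fuse_volumes(coarse, scores))
```

Since the weighted sum runs over an unordered set of views, reordering the input views leaves the fused volume unchanged up to floating-point rounding, in contrast to the order-dependent RNN fusion the abstract criticizes.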

Weighted Voxel: a novel voxel representation for 3D reconstruction

Haozhe Xie, Hongxun Yao, Xiaoshuai Sun, Shangchen Zhou, Xiaojun Tong

Abstract

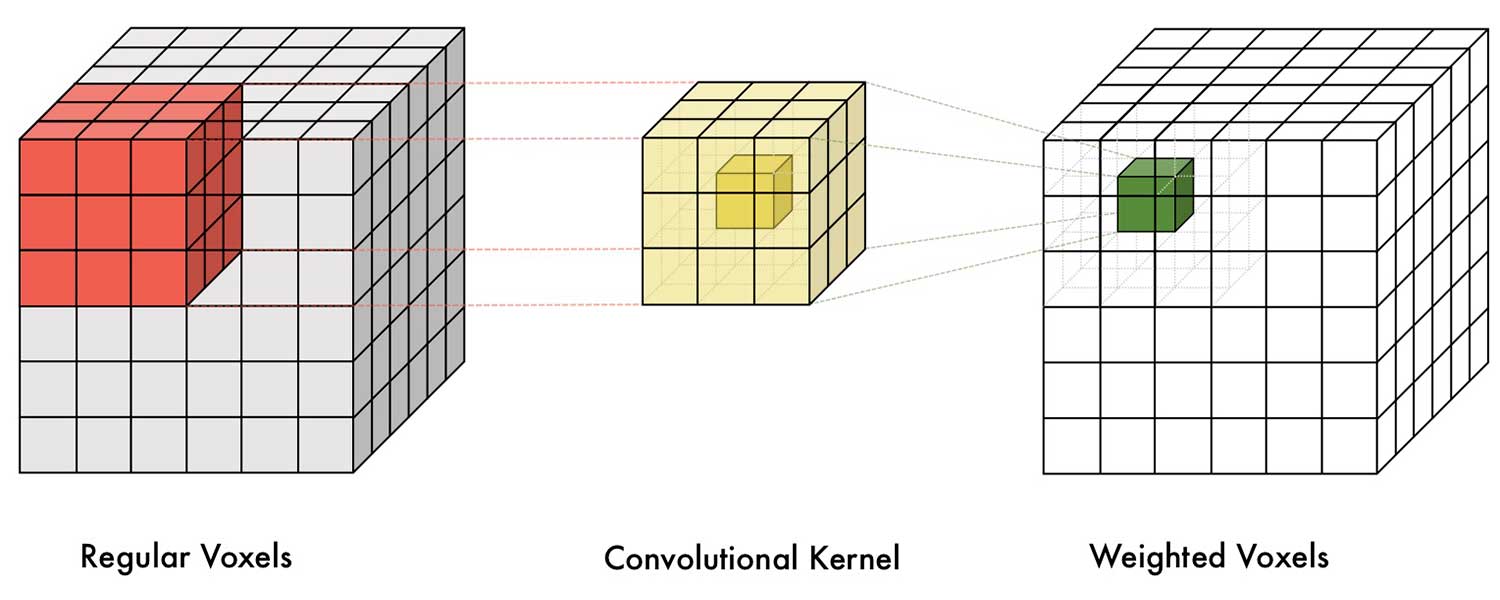

3D reconstruction has been attracting increasing attention in the past few years. With the surge of deep neural networks, the performance of 3D reconstruction has been improved significantly. However, the voxels reconstructed by extant approaches usually contain lots of noise and lead to heavy computation. In this paper, we define a new voxel representation, named Weighted Voxel. It provides more abundant information, facilitating the subsequent learning and generalization steps. Unlike a regular voxel representation, which consists of zero-one values, the proposed Weighted Voxel makes full use of the structure information of voxels. Experimental results demonstrate that Weighted Voxel not only performs better in reconstruction but also takes less time in training.