GRNet: Gridding Residual Network for Dense Point Cloud Completion

Haozhe Xie, Hongxun Yao, Shangchen Zhou, Jiageng Mao, Shengping Zhang, Wenxiu Sun

Abstract

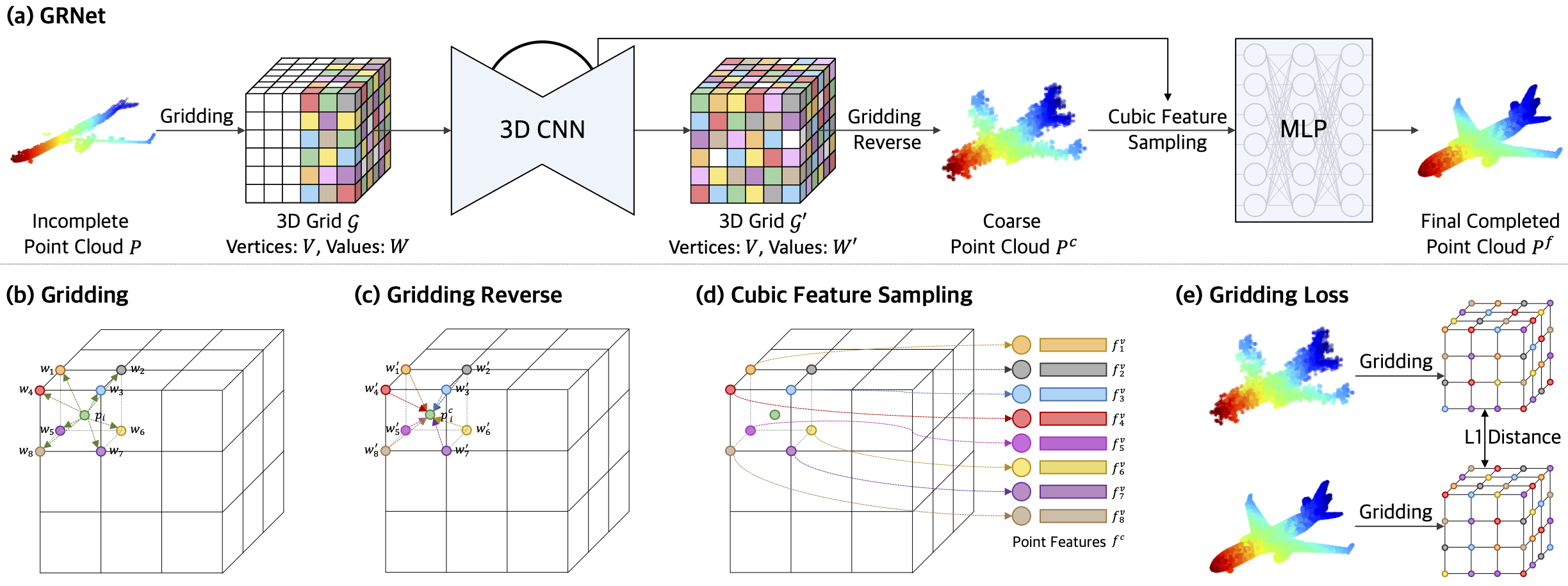

Estimating the complete 3D point cloud from an incomplete one is a key problem in many vision and robotics applications. Mainstream methods (e.g., PCN and TopNet) use multi-layer perceptrons (MLPs) to directly process point clouds, which may cause the loss of details because the structure and context of point clouds are not fully considered. To solve this problem, we introduce 3D grids as intermediate representations to regularize unordered point clouds. We therefore propose a novel Gridding Residual Network (GRNet) for point cloud completion. In particular, we devise two novel differentiable layers, named Gridding and Gridding Reverse, to convert between point clouds and 3D grids without losing structural information. We also present the differentiable Cubic Feature Sampling layer to extract features of neighboring points, which preserves context information. In addition, we design a new loss function, namely Gridding Loss, to calculate the L1 distance between the 3D grids of the predicted and ground truth point clouds, which helps recover details. Experimental results indicate that the proposed GRNet performs favorably against state-of-the-art methods on the ShapeNet, Completion3D, and KITTI benchmarks.
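To make the idea of comparing point clouds through 3D grids more concrete, here is a minimal sketch in plain Python. It is not the project's implementation. It assumes trilinear interpolation weights on the eight vertices of the cell containing each point, coordinates already scaled to `[0, resolution - 1]`, and a loss averaged over all grid vertices; the function names `gridding` and `gridding_l1` are hypothetical, and none of these details are specified on this page.

```python
from itertools import product
from math import floor


def gridding(points, resolution):
    """Scatter points onto a resolution^3 vertex grid (illustrative sketch).

    Assumptions (not taken from this page): coordinates lie in
    [0, resolution - 1], resolution >= 2, and each point spreads a
    trilinear weight over the 8 vertices of the cell that contains it.
    Returns a dict mapping (i, j, k) vertex indices to accumulated weight.
    """
    grid = {}
    for x, y, z in points:
        # Lower corner of the containing cell, clamped so the upper
        # corner (index + 1) always stays inside the grid.
        i0 = min(int(floor(x)), resolution - 2)
        j0 = min(int(floor(y)), resolution - 2)
        k0 = min(int(floor(z)), resolution - 2)
        for di, dj, dk in product((0, 1), repeat=3):
            i, j, k = i0 + di, j0 + dj, k0 + dk
            # Trilinear weight: largest when the point sits on the vertex.
            w = (1 - abs(x - i)) * (1 - abs(y - j)) * (1 - abs(z - k))
            grid[(i, j, k)] = grid.get((i, j, k), 0.0) + w
    return grid


def gridding_l1(pred_points, gt_points, resolution):
    """L1 distance between the two grids, averaged over all vertices."""
    g_pred = gridding(pred_points, resolution)
    g_gt = gridding(gt_points, resolution)
    vertices = set(g_pred) | set(g_gt)
    total = sum(abs(g_pred.get(v, 0.0) - g_gt.get(v, 0.0)) for v in vertices)
    return total / resolution ** 3
```

Because each point's eight weights sum to one, the grid preserves the total point count, and two identical clouds give a loss of zero. A differentiable version for training would express the same weights as tensor operations in a deep learning framework.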

Citation

@inproceedings{xie2020grnet,
  title={GRNet: Gridding Residual Network for Dense Point Cloud Completion},
  author={Xie, Haozhe and Yao, Hongxun and Zhou, Shangchen and Mao, Jiageng and Zhang, Shengping and Sun, Wenxiu},
  booktitle={ECCV},
  year={2020}
}

License

This project is open-sourced under the MIT license.
