Toward 3D Object Reconstruction from Stereo Images

Haozhe Xie, Hongxun Yao, Shangchen Zhou, Shengping Zhang, Xiaojun Tong, Wenxiu Sun

Abstract

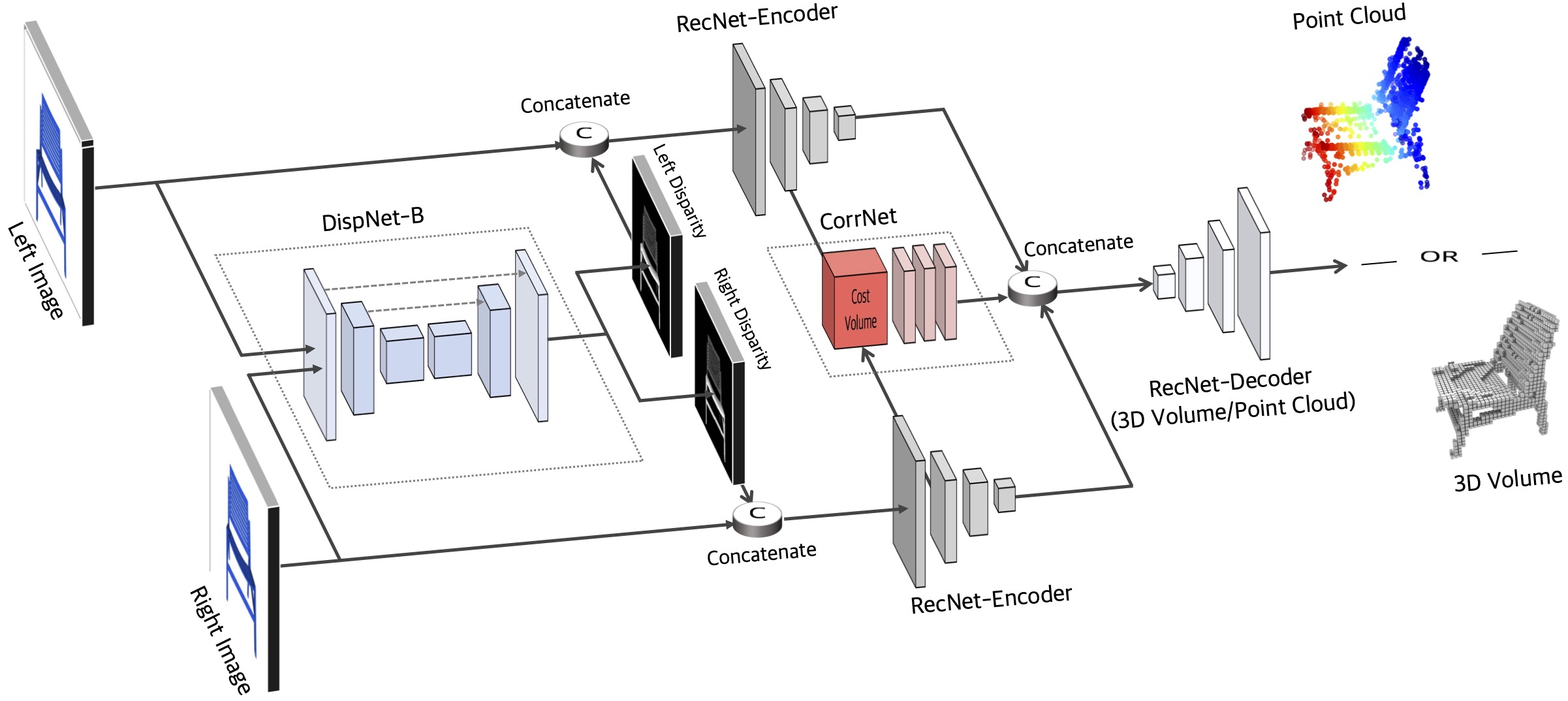

Inferring the complete 3D shape of an object from an RGB image has shown impressive results; however, existing methods rely primarily on recognizing the most similar 3D model from the training set. These methods generalize poorly and may produce low-quality reconstructions for unseen objects. Stereo cameras are now pervasive in emerging devices such as dual-lens smartphones and robots, which makes it possible to exploit the two-view nature of stereo images to explore 3D structure and thus improve reconstruction performance. In this paper, we propose a new deep learning framework for reconstructing the 3D shape of an object from a pair of stereo images; it reasons about the object's 3D structure by taking bidirectional disparities and feature correspondences between the two views into account. We also present a large-scale synthetic benchmark dataset, StereoShapeNet, containing 1,052,976 pairs of stereo images rendered from ShapeNet along with the corresponding bidirectional depth and disparity maps. Experimental results on the StereoShapeNet benchmark demonstrate that the proposed framework outperforms state-of-the-art methods.
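The abstract mentions paired depth and disparity maps for both views. As a rough illustration of how the two quantities relate, the sketch below applies the standard rectified-stereo relation depth = focal length × baseline / disparity to a left and a right disparity map. It is not the paper's code: the function name `disparity_to_depth` and the focal length and baseline values are hypothetical and are not taken from StereoShapeNet.

```python
import math


def disparity_to_depth(disparity, focal_px, baseline):
    """Convert a disparity map (in pixels) to a depth map.

    Assumes a rectified stereo pair, where depth = focal_px * baseline / disparity.
    Pixels with non-positive disparity are mapped to infinity (no valid match).
    """
    return [
        [focal_px * baseline / d if d > 0 else math.inf for d in row]
        for row in disparity
    ]


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_PX = 100.0   # focal length in pixels (assumed)
BASELINE = 0.5     # distance between the two cameras (assumed)

# Tiny made-up disparity maps for the left and right views.
left_disparity = [[10.0, 5.0], [0.0, 25.0]]
right_disparity = [[10.0, 4.0], [2.0, 25.0]]

left_depth = disparity_to_depth(left_disparity, FOCAL_PX, BASELINE)
right_depth = disparity_to_depth(right_disparity, FOCAL_PX, BASELINE)
```

Larger disparities correspond to points closer to the cameras, which is why the two representations carry the same geometric information once the camera parameters are known.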

Citation

@article{xie2021toward,
  title={Toward 3D Object Reconstruction from Stereo Images},
  author={Xie, Haozhe and Yao, Hongxun and Zhou, Shangchen and Zhang, Shengping and Tong, Xiaojun and Sun, Wenxiu},
  journal={Neurocomputing},
  volume={463},
  pages={444--453},
  year={2021}
}

License

This project is open-sourced under the MIT license.
